In my experience as a researcher, science is a long stream of failures, interrupted by rare and brief moments of something working. The trial and error inherent in research produces a steady stream of negative results: the all-too-frequent experiment that should take only a week, until months later you give up because you simply cannot get the biology to cooperate. Negative results also arise when you try to follow up on an exciting finding published by another group but cannot reproduce it. Sharing such knowledge can be very valuable and can save someone else months or years of effort. Alas, such sharing is uncommon.

Let’s set aside the cases that require contradicting another scientist’s findings. In those cases, there is a legitimate fear of turning a colleague who reviews your grants and papers into an enemy.1 Instead, I would like to discuss the larger space of null findings that simply stem from wrong hypotheses or tricky method development.

In 1979, Robert Rosenthal coined the term “file drawer problem” to describe researchers’ tendency to submit positive results for publication while locking the negative ones away in a file drawer.2 An analysis of studies in the social sciences highlighted how much researchers self-censor when it comes to reporting null studies,3 as Mark Peplow noted in Nature News:4

Of all the null studies, just 20% had appeared in a journal, and 65% had not even been written up. By contrast, roughly 60% of studies with strong results had been published. Many of the researchers contacted by Malhotra’s team said that they had not written up their null results because they thought that journals would not publish them, or that the findings were neither interesting nor important enough to warrant any further effort.

In an excellent editorial, Ivan Oransky and Adam Marcus wrote,5

That bias exists for many reasons, from the human desire to go for big, splashy stories, to the fact that successful clinical trials sell more reprints. And the bias drives research: When scientists know they need positive results to get into the big journals, which in turn earns them grants, promotions, and tenure, they’ll be pushed in that direction. And it means that we need some serious efforts, and incentives, for publishing negative studies, to help balance out those directed at positive publications.

Over the past 20 years, many approaches to correcting this imbalance have been tried. Some important progress has been made, but overall, the valiant attempts to unleash the sharing of negative results have fallen short.

There Is No Shortage of Venues for Publishing Negative Results, Just a Shortage of Submissions

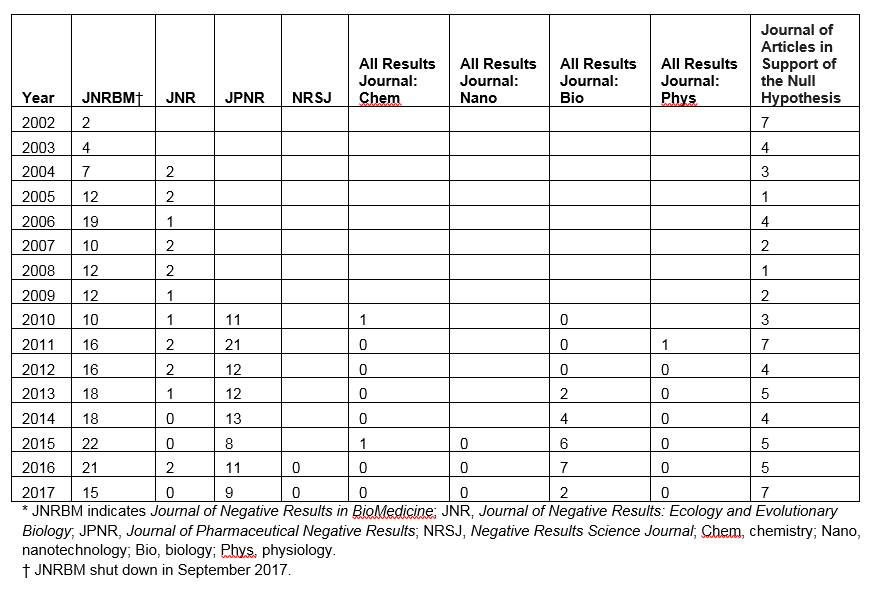

Because papers reporting negative results are cited much less than papers reporting positive ones,6,7 high–impact-factor journals tend to reject them.8 In response, many journals designed specifically for negative results have been launched. However, none has seen any real uptake (Table 1).

Table 1. Total number of papers published per year by each journal of negative results.*

It is telling that the most popular of these, the Journal of Negative Results in Biomedicine from Springer/BioMed Central (http://www.springer.com/biomed/journal/12952), ceased publication in September 2017.

In addition to the journals explicitly dedicated to negative results, the megajournal PLOS ONE was launched with such papers in mind, aiming to become the go-to venue for them. That did not happen, as Damian Pattinson, then editorial director of PLOS ONE, wrote in 2012:9

When PLOS ONE launched in 2006, a key objective was to publish those findings that historically did not make it into print: the negative results, the replication studies, the reanalyses of existing datasets. Although everyone knew these studies had value, journals would rarely publish them because they were not seen to be sufficiently important. PLOS ONE sought to become a venue for exactly these types of studies. As it happened, however, the submissions were not hugely forthcoming, although we have published a few.

Beyond journals, preprint servers and document repositories such as figshare and Zenodo offer unlimited space for easily sharing negative results. In 2012, I cofounded protocols.io, an open access repository for research methods and protocols. Even though protocols.io welcomes negative results with open arms, fewer than 1% of the public methods shared on the platform seem to fall into this category.

What If the Funder Strongly Encourages the Sharing of Negative Results?

In 2015, the Gordon and Betty Moore Foundation announced an $8 million initiative to bring genetic methods to marine microbes.10 These were high-risk grants focused on method development, with 100 labs working to get DNA into single-cell marine eukaryotes (protists) and to perform genetic manipulation in different species.

The Moore Foundation also awarded protocols.io a grant to develop the online protist network (PROT-G), which helps the foundation’s researchers collaborate and share experimental progress. One explicit goal of our grant was to “create an open and engaging virtual environment for sharing positive and negative results with active discussion groups that facilitate the exchange of ideas, tools, and techniques.”

We worked very closely with the community and did everything we could to encourage the sharing of negative results alongside positive ones. Although we succeeded in facilitating collaboration, discussion, and the rapid sharing of positive results, obtaining negative results turned out to be even harder than we had anticipated. In our mid-grant progress report to the foundation, we listed three challenges, the first and most serious of which concerned negative results:

The protocols.io platform was born out of the desire to make it easy to share improvement and optimization to existing methods. We feel that the interface does a good job of allowing this information to be shared. However, most tweaks and changes to existing methods do not result in improvements. Knowledge around what was tried and failed is also extremely useful for the community, however, it is unclear how to present this information and how to encourage scientists to share it.

The PROT-G group is now two years old, and of the 150 methods shared there, fewer than five reported negative results. This is a collaborative community of researchers, sharing easily on an online platform that welcomes negative results, with strong encouragement from the funder. Yet although researchers readily discuss negative results and difficulties in the platform’s discussion sections, in virtual conversations, and at in-person meetings, their formal reporting shows an overwhelming preference for positive results.

If Your Goal Is Scientific Progress, Pursuing a Negative Result Can Be Distracting

To understand why protocols.io users were reluctant to share negative results, we surveyed the protist researchers working on the Moore Foundation–funded effort. The responses were illuminating. Several scientists, senior and junior alike, described variants of the same concern.

You have a grant to try to tweak different existing techniques to introduce DNA into a specific protist species. You may be trying five different methods and varying dozens of steps in each one. Over the course of the year, you may try a hundred or more slightly different approaches, only one of which works. That one you test over and over, with proper controls, to assess how robust it is. If it holds up, you share it with the community.

However, what do you do with the tentative negative results? Are they truly negative? You did not repeat each attempt multiple times. Would a failed variant work in someone else’s hands? Very possibly. Would it work in a closely related species, even if it does not work in yours? Often, yes. This lack of confidence is a serious problem because, as scientists, we set a high bar for reporting a finding, and it is very hard to bring tentative results up to that standard. Repeating and pursuing every tentative negative variant would take so much time and work that it would likely preclude ever finding the technique that actually works.

Our graduate student and postdoctoral advisors at protocols.io confirmed that this obstacle is common and not specific to developing genetic methods for protists. Whatever project you are passionate about, you have a question or hypothesis and choose tools that are likely to help you make progress toward the answer. When you try techniques previously reported by other groups, you often do not obtain the same results. Then you are stuck: was the other group wrong, or is it you? Did you miss an important step in their protocol? Is the difference a consequence of ozone levels or different pipette tips in your lab? Do you spend the next year chasing the details of the previously published work, or do you set it aside and try another way to get closer to the answer you seek? (See also Arjun Raj’s post “Why there is no Journal of Negative Results.”11)

Negative Results Are Important, but We Need to Rethink Our Approach to Them

The past five years of working on protocols.io have been educational and have given me a new appreciation for the many forces conspiring against the sharing of negative results. I still believe that negative results are important and that sharing them effectively can reduce the redundant effort and rediscovery that are common in science. As I have written before,12 PLOS ONE has done a tremendous service to the research community by welcoming negative results from those who take the time to write them up. The problem is that most scientists will not write such papers.

It would be a disservice to science to push researchers into writing up tentative results on failures that may not be failures at all. If we want greater reproducibility in science, such a push would likely backfire. Nor do I think it wise to steer scientists toward investing more effort in techniques and approaches that seem unsuccessful, at the expense of chasing the answers they actually dream of finding.

We can do better at facilitating sharing and collaboration, including the sharing of negative results. That will require scientists, publishers, and funders to brainstorm creative solutions, but the result will not be a sudden explosion of submissions to a journal of negative results.

References

1. Raj A. Social networks and self-censorship in science. 17 March 2014, http://rajlaboratory.blogspot.com/2014/03/social-networks-and-self-censorship-in.html.

2. Rosenthal R. The file drawer problem and tolerance for null results. Psychol. Bull. 1979;86:638–641. http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.900.2720&rep=rep1&type=pdf.

3. Franco A, Malhotra N, Simonovits G. Publication bias in the social sciences: Unlocking the file drawer. Science. 2014;345:1502–1505. https://doi.org/10.1126/science.1255484.

4. Peplow M. Social sciences suffer from severe publication bias. Nature News, 28 August 2014, https://doi.org/10.1038/nature.2014.15787.

5. Oransky I, Marcus A. Keep negativity out of politics. We need more of it in journals. StatNews, 14 October 2016, https://www.statnews.com/2016/10/14/journals-negative-findings/.

6. Leimu R, Koricheva J. What determines the citation frequency of ecological papers? Trends Ecol. Evol. 2005;20:28–32. https://doi.org/10.1016/j.tree.2004.10.010.

7. Etter JF, Stapleton J. Citations to trials of nicotine replacement therapy were biased toward positive results and high-impact-factor journals. J. Clin. Epidemiol. 2009;62:831–837. https://doi.org/10.1016/j.jclinepi.2008.09.015.

8. Matosin N, Frank E, Engel M, Lum JS, Newell KA. Negativity towards negative results: a discussion of the disconnect between scientific worth and scientific culture. Dis. Model. Mech. 2014;7:171–173. https://doi.org/10.1242/dmm.015123.

9. Pattinson D. PLOS ONE launches reproducibility initiative. EveryONE. 14 August 2012, http://blogs.plos.org/everyone/2012/08/14/plos-one-launches-reproducibility-initiative/.

10. Gordon and Betty Moore Foundation announced an $8 million initiative to bring genetic methods to marine protists. 12 November 2015, https://www.moore.org/article-detail?newsUrlName=$8m-awarded-to-scientists-from-the-gordon-and-betty-moore-foundation-to-accelerate-development-of-experimental-model-systems-in-marine-microbial-ecology.

11. Raj A. Why there is no “Journal of Negative Results.” 26 June 2014, http://rajlaboratory.blogspot.com/2014/06/why-is-there-no-journal-of-negative.html.

12. Teytelman L. How PLOS ONE helps science get closer to the self-correcting ideal. 14 March 2017, https://www.protocols.io/groups/protocolsio/discussions/how-plos-one-helps-science-get-closer-to-the-selfcorrecting.

Lenny Teytelman is CEO of protocols.io.