Abstract

The American Society of Civil Engineers publishes 34 technical journals covering all civil-engineering subdisciplines. The society self-publishes more than 28,000 pages a year with a relatively small staff; as a result, the journals’ editorial boards were accustomed to acting independently, with little oversight. In the early 2000s, it was common for the time from submission to publication to exceed 2 years. Basic turnaround-time reports were available but left editors and journal management staff with little detailed information on where bottlenecks were occurring. New reports developed in 2011 and enhanced in 2012 were more specific and helped to achieve faster decision making. This paper serves as a case study of how to affect volunteer-editor behavior by providing detailed reporting. New behavior included increased frequency of logging in to the system, calls to action to subeditors or associate editors, changes in the amount of time given to reviewers, changes in the number of associate editors used, and heightened awareness of nonperforming editors and associate editors.

Introduction

The American Society of Civil Engineers (ASCE) has a rich history of publishing technical content. Transactions became journals of the different technical divisions, which have become the individual journal titles that we have today. The modern journals publishing program was formed largely when the society moved from New York to Reston, Virginia, in 1997. At that time, there were 25 journals.

Originally, the publishing program was entirely a paper process in which editors received submissions directly from authors and ASCE staff had little or no control over manuscript processing. A manuscript tracking system, RMTS, was used, and staff attempted to centralize the mailing and coordination of the journals. The effort was only minimally successful because the process still relied on paper manuscripts and mail.

Reporting from the tracking system was inadequate for determining the amount of time that review was taking, and the editors were still receiving submissions to their own offices. In 2005, ASCE began using Aries’ Editorial Manager online-submission and peer-review tracking system. The first journal to use the new system went live in October 2006, and the final one went live in October 2008.

Even with a stronger reporting tool, staffing levels and concentration on streamlining the process still left little time for “managing” the editors and their performance. The tool did, of course, drastically reduce staff processing time for manuscripts and eliminated mailing time.

Turnaround Times

The average time from submission to acceptance is used by authors and readers to determine the quality and relevance of a journal.1 The perception of immediacy of content varies by discipline. Medical journals, for example, have a need to publish conclusions as quickly as possible, and the material is published and cited almost immediately. Papers in civil engineering rarely reach their peak citations much before 5 years after publication.2

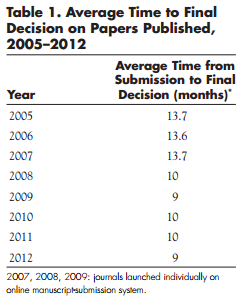

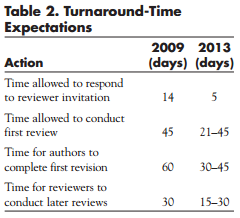

In 1992, the ASCE Board Publications Committee decreed that ASCE journals should have less than 5% of papers out for review for more than 6 months. Ideally, all papers were to be reviewed within 90 days (3 months) from submission to final decision. Those goals were not being met (Table 1) even after the online submission system was introduced. ASCE also had allowed long review and revision times, which stretched out the turnaround times (Table 2).

It is important to note that the chief editors and associate editors are not compensated for their work on the journals. Editors can request financial assistance to cover administrative assistance costs. Associate editors receive no financial support.

Editor Perceptions

When the editors were asked in their board meetings or at the annual ASCE Editors’ workshop about the turnaround time, they overwhelmingly reported that slowness was the fault of the reviewers. The editors insisted that 45 days was the appropriate amount of time to give reviewers to conduct an initial review, but the editorial coordinators who were processing manuscripts daily suspected otherwise. More detailed information was needed. Auto-generated reports from the online submission system showing average turnaround times were not specific enough to pinpoint the bottlenecks.

Methods

Editor and Reviewer Performance Report

In August 2011, the online-submission administrator (the second author of this paper) began collecting individual pieces of information on each journal. These can best be described as snippets, and they included the following:

- Staff time—Average days from submission to chief editor (CE) assignment.

- CE time—Average days for CE to assign subordinate or associate editor (AE).

- CE or AE time—Average days from submission to first reviewer invitation.

- Reviewer time—Average days for reviewer to respond to invitation.

- Reviewer time—Average days for reviewer to complete review after accepting invitation.

- AE time—Average days for AE to recommend a decision after all required reviews are received.

- CE time—Average days for CE to render final decision after AE recommendation made.

- Number of late reviews.

- Number of early and on-time reviews.

Adding all those snippets does not yield the average time from submission to first decision. There are overlaps (e.g., time from submission to first reviewer invitation includes staff time and CE-assignment time).
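
The overlap among snippets can be illustrated with a minimal sketch. It assumes each manuscript carries a handful of event dates; the field names and sample dates below are hypothetical and are not taken from Editorial Manager or from ASCE data.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Manuscript:
    # Hypothetical event dates for one manuscript (illustrative field names).
    submitted: date
    ce_assigned: date
    ae_assigned: date
    first_reviewer_invited: date


def days(start: date, end: date) -> int:
    """Whole days elapsed between two events."""
    return (end - start).days


def snippet_averages(manuscripts):
    """Average three of the snippets over a set of manuscripts."""
    return {
        # Staff time: submission to CE assignment.
        "staff_time": mean(days(m.submitted, m.ce_assigned) for m in manuscripts),
        # CE time: CE assignment to AE assignment.
        "ce_time": mean(days(m.ce_assigned, m.ae_assigned) for m in manuscripts),
        # CE or AE time: submission to first reviewer invitation.
        # This interval contains the two intervals above.
        "submission_to_invite": mean(
            days(m.submitted, m.first_reviewer_invited) for m in manuscripts
        ),
    }


# Made-up sample data, for illustration only.
papers = [
    Manuscript(date(2011, 1, 3), date(2011, 1, 5), date(2011, 1, 12), date(2011, 1, 24)),
    Manuscript(date(2011, 2, 1), date(2011, 2, 5), date(2011, 2, 10), date(2011, 2, 20)),
]
averages = snippet_averages(papers)
```

In this sample, the submission-to-invitation average already includes the staff and CE averages, so adding all three figures would count the same days twice.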

Results

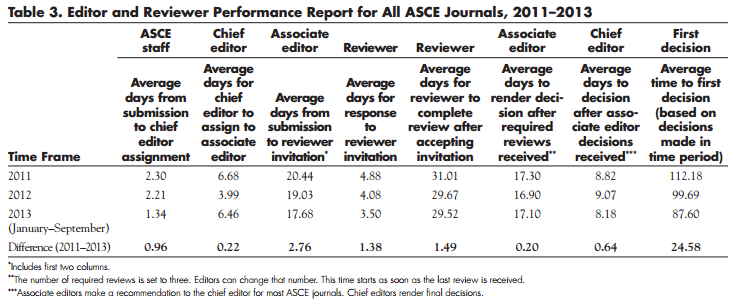

Although the editor and reviewer performance report is not a linear or chronological analysis, it does provide specific information about where the bottlenecks are on a journal-by-journal basis. The report (Table 3) covers January 2011–September 2013. The initial report, presented in 2011, showed that the reviewers were responding to invitations and completing their reviews much faster than expected (4.88 days). However, it also showed the CEs were exceeding the turnaround-time expectations for assigning AEs (6.68 days), and the AEs were not meeting the goals for assigning reviewers (Table 2). The entire average time from submission to assignment of the first reviewer was 20.44 days. Furthermore, once all required reviews were in, the AEs and CEs were taking a long time to render decisions (17.30 days and 8.82 days, respectively).

Sharing the Reports with the Editors

In September 2011, the initial numbers were shared with the Editorial Board of the Journal of Environmental Engineering at its annual meeting. As the editors were discussing the turnaround time, they lamented that the reviewers were taking too much time. The journals director (the first author of this paper) was at that meeting and shared what the initial report stated. On the average, it was taking the CE 13.2 days to assign papers to the AEs. The average time from submission to reviewer invitation was 34.8 days, showing that the AEs were also slow to assign reviewers. It was taking the AEs 18.54 days to make their decision recommendations after all reviews had been submitted. For this journal, the reviewers were given 30 days to complete their initial reviews; on average, the reviews were done in 22.4 days.

The data presented to the editors clearly showed that the bottleneck was with them, not the reviewers, who were performing better than expected. The editors made several decisions at the meeting to decrease their turnaround time:

- The CE vowed to log into the system more often to assign papers to AEs. The AEs confessed that when they get five or six papers at once, they let them sit; if they were getting only one or two at a time, they felt that they could address them more quickly. This was valuable feedback that the CE needed.

- The CE made the AEs raise their hands (literally) and pledge to move papers along faster. Asking for a group commitment in light of the performance numbers held them all accountable.

- The CE requested that the report be regenerated in 3 months to see if there had been improvement.

- The board decided to decrease the time for completion of reviews to 21 days.

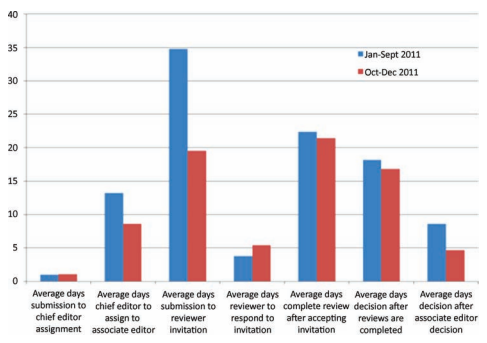

In accordance with the CE’s request, the numbers were pulled again in 3 months, and the board did shorten their review times (Fig. 1). The CE reduced his time for assigning papers from 13.2 days to 8.6 days. The time from submission to reviewer invitation dropped from 34.8 days to 19.5 days. The AEs reduced their time to make recommendations from 18.17 days to 16.8 days. The CE reduced his time for rendering a final decision from 8.6 days to 4.7 days. Altogether, nearly 25 days were shaved from the overall processing time in the span of 3 months. The editors continued to decrease their turnaround times, and times were even better in 2012.

Overall Response to Performance Report

The reports are provided to the editors twice a year: January–June data in July and January–December data in the following January. The editors receive only their own journals’ data and the average data for all 34 ASCE journals.

Initially, although the CEs appreciated the granular data, some were not convinced that they could reduce reviewer time, and others were concerned about putting pressure on their AEs. The CE of the Journal of Environmental Engineering shared his experience in reducing the turnaround time on the basis of these data as well.

Some of the editors voiced reluctance to change behavior. Responses included the following:

- Unwillingness to increase pressure on the AEs to perform faster

- Unwillingness to log into the system more often (citing competing priorities)

- Unwillingness to reduce the amount of time given to reviewers

A few changes were made in editorial processing by staff in light of the data. Even for journals that were giving reviewers 45 days to turn in reviews, the average time for a review was 32–38 days. That coincided with automatic reminders in the system. It seemed clear that reviewers were completing their reviews shortly after getting the first reminders. The reminder e-mails were changed so that the first reminder arrived earlier. Over the course of the year, average reviewer time decreased by nearly 1.5 days, and an argument was presented to individual boards that their reviewers were already reviewing in under 30 days. Several editors were then ready to change the review time to 30 days. One journal has decreased the time to 21 days.

Despite their stated reluctance to change, the editors managed to reduce the average time to first decision by 12.49 days in the 7 months after the 2011 editors’ workshop (Table 3, 2012 data).

We continued to see improvements in 2012 and 2013. Since the report was created, the average time to first decision has decreased by 24.58 days. Other factors that have played into the decrease in the average time include the following:

- A few new editors who have better performance statistics

- A greater focus on turnaround time by ASCE editorial staff

- Changes in the reminder structures

Other Report Changes

Several reports were designed or adjusted starting in 2012 to highlight the oldest papers for each journal's editors. The monthly late-list report, showing all papers in review that were more than 90 days old, had typically been an unsorted Excel table sent as a PDF, so the editors could not sort or otherwise manipulate the data. Staff reformatted the report, sorted it by paper age, and color coded it. An Excel version is now sent to the editors, and notes about the papers in most urgent need of attention are added to the body of the e-mail rather than left in the attachment alone; this increases the visibility of problem papers and gives the editorial coordinators a better idea of which papers need follow-up.
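
The sorting logic behind such a late list can be sketched briefly. This is only an illustration under stated assumptions: the manuscript IDs, dates, and the function name `late_list` are hypothetical, and the 90-day threshold is the one described above.

```python
from datetime import date


def late_list(in_review, today, threshold_days=90):
    """Return (manuscript_id, days_in_review) pairs past the threshold, oldest first."""
    aged = [(ms_id, (today - submitted).days) for ms_id, submitted in in_review.items()]
    late = [(ms_id, age) for ms_id, age in aged if age > threshold_days]
    # Oldest papers first, so the most urgent cases lead the report.
    return sorted(late, key=lambda item: item[1], reverse=True)


# Made-up submission dates for papers currently in review.
in_review = {
    "MS-001": date(2012, 1, 10),
    "MS-002": date(2012, 5, 1),
    "MS-003": date(2012, 3, 1),
}
report = late_list(in_review, today=date(2012, 7, 1))
```

Here `report` holds only the papers older than 90 days, ordered by age, which mirrors the sorted view that replaced the unsorted table.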

Discussion

Volunteer editors undertake large responsibilities for overseeing the peer review of scholarly journals. Publishers of the journals are struggling to remain competitive while being mindful of the pressures put on volunteer editors. In our case, anecdotal evidence from the editors regarding the bottlenecks in processing time was inaccurate.

Manuscript-submission systems are increasingly sophisticated with respect to generating data reports. Many systems provide “canned” reports that show high-level statistics that are useful in some types of reporting; however, it is difficult to track specific concerns by reviewing only canned reports.

The Editor and Reviewer Performance Report has completely changed the conversation at editorial board meetings. The CEs and their AEs have data that show where the bottlenecks are in the process. Arming the editors with this information is critical for allowing them to do what they do best: solve problems.

From the publisher perspective, having these reports and more granular data has highlighted weaknesses in the process. More attention is being paid to late papers, and coordinators are more aggressive in contacting editors who appear to be struggling to keep up. Journal managers who perform a more detailed analysis will have data to either support or refute editor perceptions on their performance activity. Furthermore, by providing specific turnaround times for smaller pieces of the tasks involved with peer review, the editors have data for valuable discussions about how to manage the workload.

References

1. Snodgrass R. Journal relevance. SIGMOD Record. September 2003. 32(3):11–15.

2. Thomson Reuters. 2011 Journal Citation Reports, Science Edition. 2011.

ANGELA COCHRAN is director, journals, and LIZ GUERTIN is manager of journals systems administration of the American Society of Civil Engineers, Reston, Virginia.