MODERATOR:

Anna Jester

Vice President of Sales & Marketing

eJournalPress

Wiley Partner Solutions

SPEAKERS:

Mary K Billingsley

Managing Editor

American Academy of Child and Adolescent Psychiatry

Christine Beaty

Director of Journal Operations

American Heart Association

REPORTER:

Donna Tilton, ELS

American Journal of Sports Medicine

How would someone describe you? We are all categorized in different ways at different times and for different purposes. If your publication or organization is considering ways to incorporate demographic data from your stakeholders, one of the main takeaways from this session is that self-reporting is key: people should be allowed to choose their own labels and definitions.

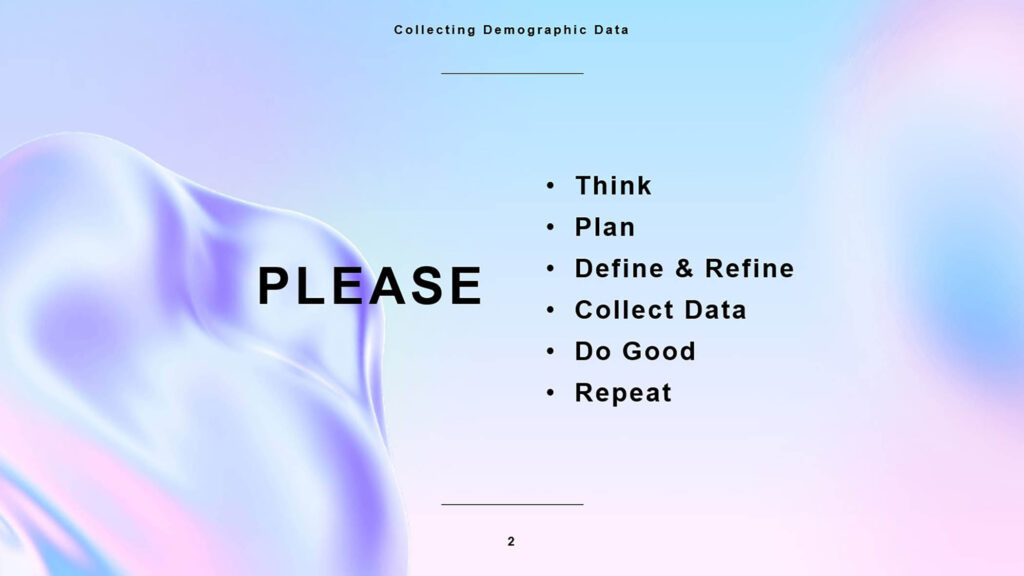

Anna Jester, the moderator for this session, emphasized from the start that organizations looking to gather demographic information should follow these steps: Think, Plan, Define and Refine, Collect Data, Do Good, and Repeat.

Mary Billingsley, Managing Editor, American Academy of Child and Adolescent Psychiatry (AACAP), and Christine Beaty, Director of Journal Operations, American Heart Association (AHA), then shared their experiences with collecting and using demographic information.

Think: Why Are You Collecting the Data?

Both the AACAP and AHA started with overall commitment goals at the society level. In tandem with AACAP initiatives, the journal's senior leadership published a statement in 2020¹ committing to an antiracist vision for journal operations, with specific initiatives and goals. The AHA established a 2024 Impact Goal² to advance cardiovascular health for all, including identifying and removing barriers to health care access and quality. As part of this goal, the journals committed to assessing the diversity of their authors, including those writing editorial commentaries.

Plan: What Are You Going to Do?

Journals need to collect demographic data from authors, reviewers, and editors to identify gaps and measure change over time.

The AHA journals created an Equity Diversity Inclusion Editorial Board³ to help the journals fulfill their commitment to health equity in journal content and to increase diversity in the author and reviewer pools by establishing benchmarks and evaluating progress. As one AHA journal editor put it: “What gets measured, gets done.”

Define and Refine

The AACAP journals began collecting user demographic data in 2020 using a schema adapted from other psychiatry publications. They have used those data to report aggregated information about the makeup of the editorial boards and have begun using them to benchmark author and reviewer activity. The journals are planning more in-depth analyses of manuscript decisions and the review process to identify biases. At the same time, plans are moving forward to align demographic data collection across the society (journals, meetings, membership, etc.), using a combination of the Joint Commitment schema and the GuideStar/Candid schema to refine the answer options offered for race and gender identity. The society is setting clear goals and objectives that will be transparent to users, so that they know why the data are being collected and how they will be used. These goals and objectives will be reviewed continuously and adjusted as needed.

Collect Data

This was the meat of the discussion, with many audience questions about reporting in specific editorial systems. Both presenters described adding mandatory self-reporting fields to the profile area of their manuscript tracking systems.

The AHA journals had been collecting data on their editorial teams for several years and reporting them to the publications committee. With the 2024 Impact Goal announcement, they added more detailed data collection for authors and reviewers. In the editorial management system, the demographic fields are mandatory and users must update their answers once a year, although the option “prefer to not answer” is always available.

The basic principles of data collection include providing clear information on consent, confidentiality, and the purpose and intended use of the data; offering multiselect check boxes and open-ended questions, as well as a “decline to answer” option; treating the data with sensitivity and confidentiality; and reporting regularly.

Do Good

The “easiest” place to start is the journal masthead, where self-reported demographics of editors and editorial board members can reveal underrepresented groups and guide programs to increase diversity and equity. But the AACAP journals were also able to look at data on reviewers and authors to consider questions such as whether women were disproportionately declining review invitations during the early months of the COVID-19 pandemic. The data showed that women were invited to review as often as men and were not declining reviews more often than during the same period in the year before the pandemic. Information on authors supported a limited analysis of acceptance rates and of whether the call for papers on antiracism attracted new authors.

The AHA editorial office has created a report, built on standard report parameters and filterable by role (e.g., authors, reviewers, editors), that provides aggregated demographic data as overall numbers and percentages. What do they do with these data? The data are presented to editors several times a year to help them evaluate their progress. Each calendar year, the data are reviewed against editors' goals, and progress on those goals is reported to the AHA board. Public data are posted on the journal websites; these are aggregated across the entire journal portfolio and cover the diversity of the editorial teams and of invited authors and reviewers.

Repeat

Christine Beaty reported that for the AHA journals, progress is visible but slow. They now have open calls for associate editor positions and offer flexibility in workload to allow younger, early-career members to participate. Editors are reminded to seek out authors and reviewers outside their usual networks.

For some medical specialties, the plan may need to be adjusted to set reasonable goals, such as aiming to reflect the community rather than forcing diversity that does not yet exist in the field. Organizations should also actively avoid overburdening vulnerable colleagues who may be repeatedly asked to fill these gaps.

After the presentations, a lively discussion followed, with audience questions on how to extract reports from Editorial Manager and how this data collection and reporting affect staff workloads. Questions of privacy and confidentiality were also raised: journals should handle these data carefully and train any staff who have access to them.

The practice of collecting and managing data on editors, authors, and reviewers is still evolving, and many journals are just starting the process. How these data are collected and how they can be used to meet the goals of scholarly publications will remain a topic of discussion. CSE maintains a list of DEI Scholarly Resources on its website to help you keep up to date: https://www.councilscienceeditors.org/dei-scholarly-resources