Copyediting is a fundamental part of the publication process. It can be performed before a manuscript is submitted for peer review or afterwards. The relationship between copyediting and the submission outcome (i.e., acceptance to a journal or conference) is not well understood. To discern the value of copyediting in relation to the review process, I examined peer reviews of manuscripts submitted to a large scholarly conference and surveyed the frequency of terms or phrases in reviewer comments that were associated with copyediting (e.g., “poorly written,” “wordy,” “typo”). I also sought to determine whether the frequency of positive, neutral/unknown, or negative copyediting terminology was correlated with submission outcome (reject and different types of accept).

Background

Not all researchers are as gifted in writing as they are in their fields of expertise. Thus, copy editors are sometimes hired to refine a manuscript prior to its submission for peer review. Alternatively, some researchers will copyedit their papers themselves. Various scholarly journals also employ copy editors to review and edit articles prior to publication; in this case, copyediting takes place after peer review has been completed and an acceptance decision has been rendered. What is the purpose of the copyediting process? The Society for Editors and Proofreaders states “the aim of copy-editing is to ensure that whatever appears in public is accurate, easy to follow, fit for purpose and free of error, omission, inconsistency and repetition.”1 Among the various types of copyediting is substantive, or content, copyediting, whereby a copy editor is concerned with the overall structure, organization, and presentation of the ideas in a document. A copy editor may also be responsible for ensuring proper grammar and usage; this includes (but is not limited to) establishing consistency in terminology and abbreviations, optimizing word choice, and reducing ambiguity. The mechanical/proofreading aspect of copyediting comprises the review of punctuation, spelling, and labeling, and it may include the correction of typographical errors. Finally, a copy editor may review citations and cross-references and also fact-check when appropriate.

Some previous studies have analyzed the impact of copyediting on articles and papers. Vultee2 studied how editing may affect audience perception of news articles and found that editing had a significant positive effect. Copyedited articles were rated higher in terms of impressions of professionalism, organization, writing, and value.2,3 Wates and Campbell4 examined the copyediting function using author versus publisher versions of articles and tracked the changes that occurred between the initial and final versions. They found that 42.7% (n = 47) of the changes were related to incorrect or missing references; 34.5% (n = 38) were typographical, grammatical, or stylistic; 13.6% (n = 15) regarded missing data; 5.5% (n = 6) were semantic; and 3.6% (n = 4) aligned the articles with journal-specific conventions.4,5 Overall, Wates and Campbell4 concluded that copyediting was an important function that yielded greater article accuracy and integrity.

In the case of peer review, reviewers’ perceptions of journal and conference submissions may be negatively affected by a lack of thorough copyediting; conversely, their perceptions may be elevated if careful copyediting has been performed. This article describes a study of the relationship between copyediting comments by reviewers and the eventual outcome of submissions under peer review.

Methods

The dataset used for this study comprised a large set of peer reviews of scientific papers from a popular computer science conference. The reviews were accessed from OpenReview.net, a website containing publicly available papers and reviews from many scientific conferences and journals (mainly in the computer/information science domain).9 In an effort to promote openness in scientific communication, OpenReview is open access and open source, and it uses a cloud-based web interface and database to store manuscripts and reviews.9 This study sourced 2,757 reviews of 913 submissions to the 6th International Conference on Learning Representations (ICLR 2018); this conference is dedicated to advancements in the deep learning branch of artificial intelligence.10 All submissions had at least three reviews, and a small number (n = 18) had four reviews.

To detect whether a reviewer had made a reference to copyediting, I first consulted various sources that define the scope of a copy editor and subgenres of copyediting.6–8 I then compiled a list of 163 terms and phrases that encompass the various duties of a copy editor (or the concepts that one is concerned with when copyediting his/her own paper). These terms and phrases have a positive, negative, or neutral/unknown tone associated with them, and I grouped them as such. For example, a reviewer who uses the phrase “is clearly written” is probably complimenting the author (i.e., positive tone). An example of a review comment with a negative tone is the word “reorganize”; this would most likely not be used unless the reviewer was requesting that the author change the structure of the paper to improve it. Lastly, if a term such as “consistency” is noted in a review, it is unclear if this is a positive or negative statement without reading the review itself, so this would be counted under the “neutral/unknown” category. In addition to the tone categories, I further grouped the terminology into categories per type of editing and subtype (e.g., “substantive/content” → “accuracy”; see Appendix 1).

To ensure that the terms and phrases were not counted twice, each term or phrase was unique and not a fragment of a larger phrase. For example, the word “clear” could not be included (by itself) because the “clear” count would include all instances of “not clear” and “clear” combined. It was essential that the positive and negative terms did not overlap. The use of longer and more specific phrases (e.g., “is clearly presented” versus “not clearly presented”) allowed the results to be interpreted more accurately. See Appendix 1 for a complete list of terms/phrases and editing categories.
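The fragment problem described above can be illustrated with a short sketch. The helper names (`count_substring`, `find_overlaps`) and the sample sentence are hypothetical and are not taken from the study's code; the sketch assumes plain substring matching on lowercased text.

```python
def count_substring(text: str, phrase: str) -> int:
    """Count non-overlapping occurrences of phrase in text, ignoring case."""
    return text.lower().count(phrase.lower())


def find_overlaps(phrases: list[str]) -> list[tuple[str, str]]:
    """Return (short, long) pairs where one phrase is a fragment of another."""
    lowered = sorted({p.lower() for p in phrases}, key=len)
    return [
        (short, long_)
        for i, short in enumerate(lowered)
        for long_ in lowered[i + 1:]
        if short in long_
    ]


# Hypothetical review text, used only to show the double-counting effect.
review = "The method is not clear. Section 3 is clear, but the proof is not clear."

naive_clear = count_substring(review, "clear")    # mixes both tones
is_clear = count_substring(review, "is clear")    # positive phrase only
not_clear = count_substring(review, "not clear")  # negative phrase only

# A list containing the bare word "clear" is flagged; the longer phrases are not.
flagged = find_overlaps(["clear", "is clear", "not clear"])
clean = find_overlaps(["is clear", "not clear"])
```

Here the bare word “clear” matches every occurrence regardless of tone, whereas the two longer phrases split the same occurrences into separate positive and negative counts.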

Text-mining methods were applied to reviews retrieved through the OpenReview application programming interface to obtain, for each review, the number of occurrences of terms and phrases from the predetermined list (Appendix 1) and the final outcome of the paper tied to the review: accept as oral presentation (2.5% of submissions; n = 23), accept as poster (34.3%; n = 313), invite to workshop (9.8%; n = 89), and reject (53.5%; n = 488).11 To increase the hit rate, all terms were lowercased and all punctuation and hyphenation surrounding the terms were removed (e.g., “well-polished” became “well polished”).
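The normalization and counting step can be sketched as follows. This is a minimal illustration, not the study's actual code: the function names, the sample review, and the choice of whole-word matching are assumptions, and retrieval of reviews from OpenReview is left out entirely.

```python
import re
from collections import Counter


def normalize(text: str) -> str:
    """Lowercase, turn punctuation and hyphens into spaces, collapse whitespace."""
    lowered = text.lower()
    no_punct = re.sub(r"[^a-z0-9\s]", " ", lowered)
    return " ".join(no_punct.split())


def count_terms(review: str, terms: list[str]) -> Counter:
    """Count whole-word occurrences of each normalized term in one review.

    Whole-word matching is an assumption made for this sketch.
    """
    normalized_review = normalize(review)
    counts = Counter()
    for term in terms:
        needle = re.escape(normalize(term))
        # Match only when the term is bounded by whitespace or the string edges.
        pattern = rf"(?<!\S){needle}(?!\S)"
        counts[term] = len(re.findall(pattern, normalized_review))
    return counts


# Hypothetical review text and a three-item excerpt standing in for the term list.
sample_review = (
    "The paper is Well-Polished, but Figure 2 has a typo; "
    "the other figures are fine."
)
sample_counts = count_terms(sample_review, ["well polished", "figure", "typo"])
```

With whole-word matching, “figures” in the sample is not counted as an occurrence of “figure”; a substring-based matcher would behave differently, so the matching rule needs to be fixed before counts are compared.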

Results

Across the review set, 10,111 instances of copyediting terms or phrases from the predetermined list were identified; of those, 666 instances were positive, 2,564 were negative, and 6,881 were neutral/unknown. In addition, 83.4% of peer reviews contained one or more of the terms/phrases: 21.6% contained positive, 46.6% contained negative, and 70.3% contained neutral/unknown. Statistical analysis of these instances and their relationship to the outcomes of the review process is detailed below.

Most Frequent Copyediting Notations

The copyediting terms and phrases that appeared most frequently in the ICLR 2018 peer reviews (with 100 or more occurrences across the full dataset) are listed in order of descending frequency in Table 1.

Table 1. Most frequently used copyediting terms and phrases (with 100 or more instances in the review dataset). Also reported is the percentage of all reviews containing these terms or phrases.

| Term or Phrase | Tone | Number of Notations | Percentage of All Reviews Containing Term or Phrase* |
| --- | --- | --- | --- |
| Figure | Neutral/Unknown | 1,167 | 22.52 |
| Table | Neutral/Unknown | 716 | 16.36 |
| Not clear | Negative | 698 | 17.77 |
| Unclear | Negative | 565 | 13.78 |
| Language | Neutral/Unknown | 462 | 9.03 |
| Appendix | Neutral/Unknown | 380 | 9.39 |
| Clarity | Neutral/Unknown | 363 | 11.10 |
| Fig | Neutral/Unknown | 332 | 6.75 |
| Explain | Neutral/Unknown | 321 | 9.25 |
| References | Neutral/Unknown | 287 | 8.31 |
| Is well written | Positive | 266 | 9.54 |
| Reference | Neutral/Unknown | 262 | 6.93 |
| Label | Neutral/Unknown | 253 | 5.30 |
| Labels | Neutral/Unknown | 248 | 5.55 |
| Semantic | Neutral/Unknown | 223 | 7.76 |
| Confusing | Negative | 218 | 7.91 |
| Typos | Negative | 201 | 7.29 |
| Notation | Neutral/Unknown | 200 | 7.25 |
| Figures | Neutral/Unknown | 191 | 6.93 |
| Clarify | Negative | 155 | 5.62 |
| Title | Neutral/Unknown | 122 | 4.43 |
| Is clear | Positive | 117 | 4.24 |
| Transition | Neutral/Unknown | 116 | 4.21 |
| Typo | Negative | 112 | 4.06 |
| Caption | Neutral/Unknown | 106 | 3.84 |
| Tables | Neutral/Unknown | 100 | 3.05 |

*The percentage of reviews is not proportional to the number of notations; the same terms/phrases may have been used multiple times in a single review.

These are elements that reviewers appeared to focus on, and it may be useful for authors to consider and review how they are handling these components and concepts before they submit their manuscripts for review. By tackling potential copyediting issues in advance, authors may save reviewers time and effort that they would otherwise spend identifying copyedit-related errors and allow reviewers to focus more on manuscript content.
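The footnote to Table 1 distinguishes two quantities: the total number of notations of a term and the share of reviews that contain it at least once. The sketch below shows how the two can diverge; the function name and the per-review counts are hypothetical and are not drawn from the dataset.

```python
def summarize_term(per_review_counts: list[int]) -> tuple[int, float]:
    """Return (total notations, percentage of reviews containing the term)."""
    total = sum(per_review_counts)
    containing = sum(1 for count in per_review_counts if count > 0)
    percentage = 100.0 * containing / len(per_review_counts)
    return total, round(percentage, 2)


# Hypothetical counts of one term in five reviews: one review uses it four times.
example_counts = [4, 0, 0, 1, 0]
total_notations, pct_reviews = summarize_term(example_counts)
```

In this made-up example the term is noted five times but appears in only two of the five reviews, which is why the two numeric columns of Table 1 do not rank the terms identically.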

Relationship Between Tone of Copyediting Terminology and Submission Outcomes

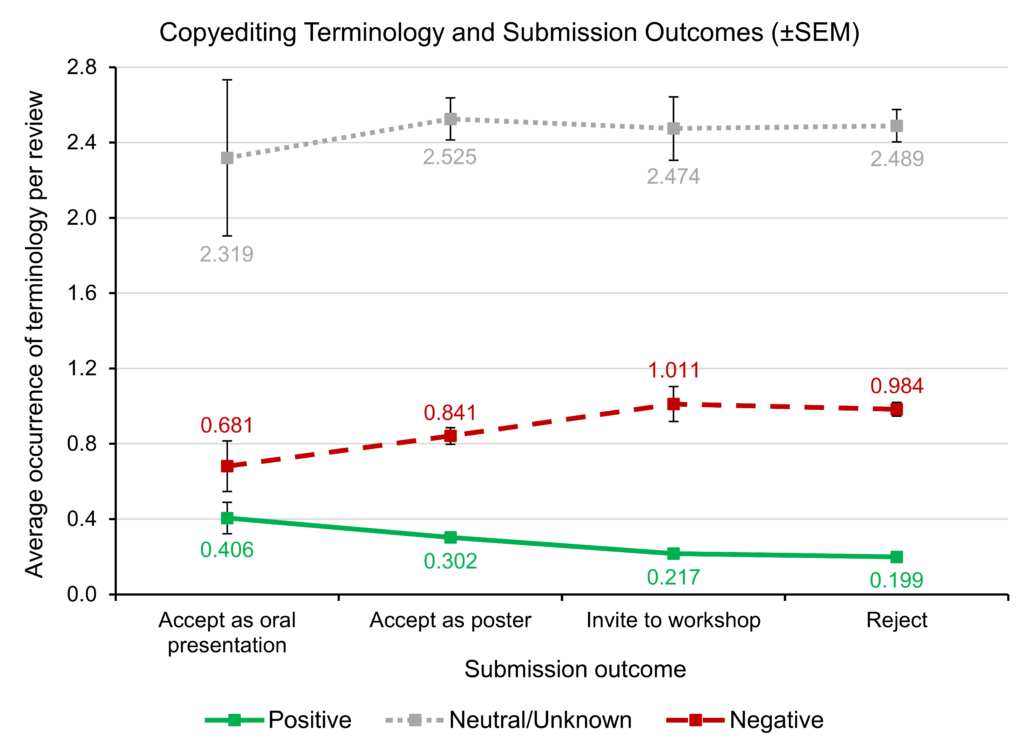

I also analyzed the relationship between the tone of terminology used in reviews and the outcome of the review process. Figure 1 displays the average occurrence of positive, negative, or neutral/unknown copyediting terminology across all four submission outcomes.

To have a manuscript accepted to ICLR 2018 as an oral presentation is the most desirable outcome, but it applied to only the top 2.5% of submissions. Interestingly, submissions with the highest frequency of positive copyediting terminology (0.406 instances per review) and the lowest frequency of negative terminology (0.681 instances per review) were those accepted as oral presentations. Conversely, manuscripts that had the lowest frequency of positive copyediting terminology (0.199 instances per review) were those that were rejected. As may be expected, the use of neutral/unknown terminology was most common and also largely unchanged across the four outcomes. The standard error of the mean (denoted by the error bars in Figure 1) was higher for the neutral/unknown tone category, as compared with the positive and negative groups; reviewer comments belonging to the neutral/unknown group may have ultimately been positive or negative in nature, which is a possible explanation for the increased variance observed in this group.
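The per-outcome averages and error bars described above follow the usual definitions of the mean and the standard error of the mean (sample standard deviation divided by the square root of the number of reviews). A minimal sketch, using hypothetical per-review counts rather than the study's data:

```python
import math
import statistics


def mean_and_sem(values: list[float]) -> tuple[float, float]:
    """Return the mean and the standard error of the mean (sample SD / sqrt(n))."""
    n = len(values)
    mean = statistics.fmean(values)
    sem = statistics.stdev(values) / math.sqrt(n)
    return mean, sem


# Hypothetical counts of positive terms per review, grouped by submission outcome.
positive_counts_by_outcome = {
    "accept (oral)": [1, 0, 0, 1, 0, 0],
    "reject": [0, 0, 1, 0, 0, 0],
}

summary = {
    outcome: mean_and_sem(counts)
    for outcome, counts in positive_counts_by_outcome.items()
}
```

Because the standard error shrinks with the square root of the group size, a small group such as the oral presentations would be expected to show wider error bars than the much larger reject group, all else being equal.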

A multivariate analysis of variance (MANOVA) was performed using SPSS; the test showed statistically significant differences in terminology tone per submission outcome (F [9, 6695] = 5.003, P < 0.001, Wilks’ Λ = 0.984, partial η² = 0.005). After Bonferroni correction, statistical significance could be accepted at P < 0.017. Specifically, there was statistical significance for the positive terminology group (P < 0.001) but not for the negative group (P = 0.028) or the neutral/unknown group (P = 0.962). Tukey HSD post-hoc tests applied to the counts in the positive group revealed significant differences in the occurrence of positive terminology between the outcomes of accept as an oral presentation (0.406 instances per review) and reject (0.199 instances per review), as well as between accept as a poster presentation (0.302 instances per review) and reject (0.199 instances per review).
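The corrected threshold of 0.017 is consistent with dividing a conventional significance level of 0.05 by the three tone groups tested. The sketch below reproduces that arithmetic; the 0.05 starting level is an assumption (the text reports only the corrected value), and 0.001 is used as an upper bound for the positive group because the text reports it only as P < 0.001.

```python
def bonferroni_threshold(alpha: float, n_tests: int) -> float:
    """Return the per-test significance threshold after Bonferroni correction."""
    return alpha / n_tests


# P values as reported in the text; 0.001 is an upper bound for "P < 0.001".
p_values = {"positive": 0.001, "negative": 0.028, "neutral/unknown": 0.962}

# Assumed family-wise alpha of 0.05, split across the three tone groups.
threshold = bonferroni_threshold(0.05, len(p_values))

significant = {tone: p < threshold for tone, p in p_values.items()}
```

Under these assumptions only the positive group falls below the corrected threshold. The negative group (P = 0.028) would pass an uncorrected 0.05 cutoff but not the corrected one.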

Summary of Results

This study demonstrated that terminology and phrases associated with copyediting appeared with regular frequency in peer reviews. In fact, 83.4% of peer reviews from ICLR 2018 contained one or more terms/phrases from the predetermined list: 21.6% contained positive items, 46.6% contained negative, and 70.3% contained neutral/unknown. Table 1 shows the terms and phrases (from the predetermined list) that appeared most often. Submissions that were accepted as oral presentations (the most desirable outcome) had the highest frequency per review of positive terms/phrases and the lowest frequency of negative terms/phrases; rejected submissions had the lowest frequency of positive terms/phrases (see Figure 1).

Conclusions

This study examined copyediting terms and phrases occurring in peer review comments and their relationship to the outcomes of the review process. There appeared to be trends tied to negative and positive copyediting terminology and the decision for a manuscript, with some statistically significant values. It is possible that these values achieved statistical significance because of the large sample size. Further research across additional peer review datasets could help establish whether these findings are more broadly generalizable. Future studies may benefit from a narrower set of terminology, including the use of fewer neutral/unknown terms or an exclusion of this “tone” altogether.

Peer reviewers commented most often about clarity, writing, word choice, exposition, figures/tables/appendices, labels, references, and typos/punctuation. Presumably, it would be beneficial to invest more time in polishing these aspects of a scientific article. Research content is fundamental to a manuscript’s consideration for acceptance to a conference or journal; still, acceptance may be boosted with careful copyediting. The fewer copyediting issues there are in a paper, the less time and effort reviewers will need to spend pointing these out—a win-win situation for everyone involved in the review process.

Acknowledgements

Thank you to Ryen White for his assistance with the initial text mining for this project and his statistical test recommendations.

References

1. Society for Editors and Proofreaders. FAQs: What is copy-editing? [accessed 2018 Nov 26]. https://www.sfep.org.uk/about/faqs/what-is-copy-editing/.

2. Vultee F. Audience perceptions of editing quality: Assessing traditional news routines in the digital age. Digital Journalism. 2015;3(6):832–849. https://doi.org/10.1080/21670811.2014.995938.

3. Stroud NJ. Study shows the value of copyediting. American Press Institute. March 3, 2015. [accessed 2018 Nov 26]. https://www.americanpressinstitute.org/publications/research-review/the-value-of-copy-editing.

4. Wates E, Campbell R. Author’s version vs. publisher’s version: an analysis of the copy‐editing function. Learned Publishing. 2007;20(2):121–129. https://doi.org/10.1087/174148507X185090.

5. Davis P. Copy Editing and Open Access Repositories. The Scholarly Kitchen. June 1, 2011. [accessed 2018 Nov 26]. https://scholarlykitchen.sspnet.org/2011/06/01/copyediting-and-open-access-repositories.

6. Reeder E. Three Types of Editors: Developmental Editors, Copyeditors, and Substantive Editors. New York, NY: Editorial Freelancers Association; 2016.

7. Einsohn A. The Copyeditor’s Handbook: A Guide for Book Publishing and Corporate Communications, with Exercises and Answer Keys. Berkeley, CA: University of California; 2011.

8. Stainton EM. The Fine Art of Copyediting. New York, NY: Columbia University Press; 2002.

9. OpenReview. Amherst (MA): Information Extraction and Synthesis Laboratory, College of Information and Computer Science, University of Massachusetts Amherst. [date unknown]–[accessed 2019 Jan 29]. https://openreview.net.

10. International Conference on Learning Representations. 2013–2019. [accessed 2019 Feb 12]. https://iclr.cc/.

11. OpenReview Python API. OpenReview API Documentation. OpenReview Team Revision bac6adf3; 2018. [accessed 2019 Jan 29]. https://openreview-py.readthedocs.io/en/latest/.

Resa Roth is a Quality Systems Specialist with Bio-Rad Laboratories in Woodinville, Washington.

Appendix 1 is available for download here: https://www.csescienceeditor.org/wp-content/uploads/2019/06/Appendix-1.pdf.