MODERATOR:

Michael Friedman

Acting Co-Director of Publications and Journals Production Manager

American Meteorological Society

Boston, Massachusetts

SPEAKERS:

Heather DiAngelis

Manager, Journals Production

American Society of Civil Engineers

Reston, Virginia

Victoria Koulakjian

Content Production Manager, Journals

American Speech-Language-Hearing Association

Rockville, Maryland

Theresa Fucito

Senior Manager, Publishing Operations

AIP Publishing

West Babylon, New York

REPORTER:

Bethanie Rammer

Managing Editor, Communications

African Society for Laboratory Medicine

Addis Ababa, Ethiopia / Nashville, Tennessee

This session, moderated by Michael Friedman of the American Meteorological Society, was a follow-up to a 2018 Annual Meeting session¹ that explored a variety of journal metrics used by publishers and vendors. It presented case studies of how production metrics were applied to address real-world production problems.

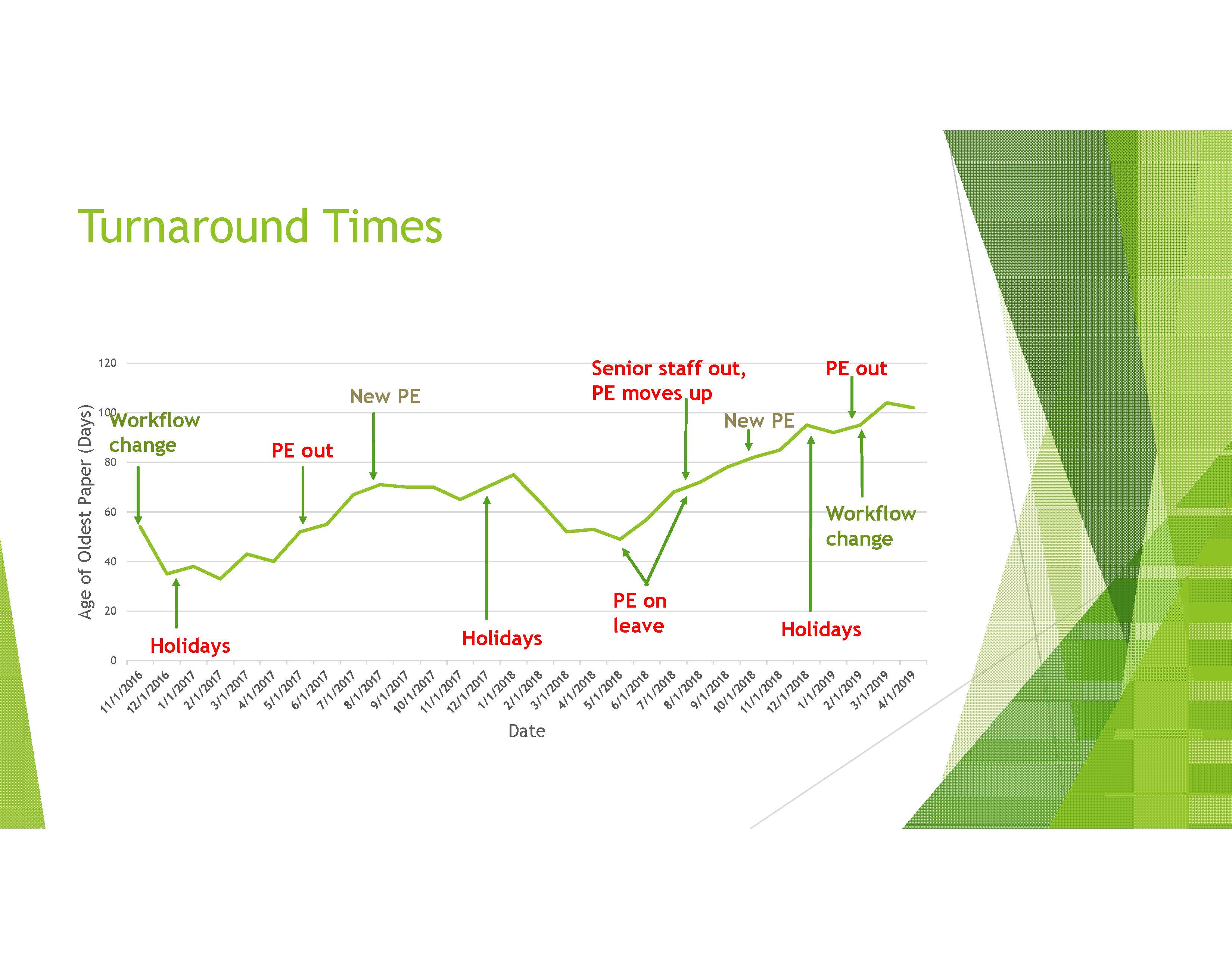

First, Heather DiAngelis of the American Society of Civil Engineers presented her case on a production backlog and long acceptance-to-publication turnaround times for the 34 journals she and her team manage. She began collecting volume and turnaround time data, then examined how the backlog and turnaround times changed as various workflow changes were implemented. When these two metrics were tracked and analyzed against mitigating factors, several culprits emerged and were easy to see in the data (Figure 1).

The first was holidays; the second was staff changes, not only team members leaving but also the “trickle down” effect of senior staff leaving and being replaced by team members promoted to fill that position. The impact of these events on both metrics—and the difficulty recovering from them—was easy to see as the backlog and turnaround times increased over the end-of-year holidays and when staff took time off or changed positions at the organization. Less easy to see in the data were smaller events, such as brief staff absences, the comparative competency of individuals, and changes in the attitude or motivation of staff, which could also affect the metrics.
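The two metrics described above can be illustrated with a minimal sketch. It is not the speaker's actual tooling: the sample dates, the function names, and the choice of the median as the summary statistic are all assumptions made for illustration. It computes acceptance-to-publication turnaround per publication month and counts the backlog on a given day.

```python
from datetime import date
from statistics import median

# Hypothetical records: (accepted, published); published is None while
# the article is still in production. None of these dates come from the session.
articles = [
    (date(2018, 10, 1), date(2018, 11, 15)),
    (date(2018, 10, 20), date(2018, 12, 19)),
    (date(2018, 11, 5), date(2019, 1, 14)),
    (date(2018, 12, 3), None),
]


def median_turnaround_by_month(records):
    """Median acceptance-to-publication days, grouped by publication month."""
    by_month = {}
    for accepted, published in records:
        if published is None:
            continue  # unpublished articles have no turnaround time yet
        key = (published.year, published.month)
        by_month.setdefault(key, []).append((published - accepted).days)
    return {month: median(days) for month, days in sorted(by_month.items())}


def backlog_on(records, day):
    """Number of articles accepted on or before `day` and not yet published by `day`."""
    return sum(
        1
        for accepted, published in records
        if accepted <= day and (published is None or published > day)
    )


turnaround = median_turnaround_by_month(articles)
backlog_mid_december = backlog_on(articles, date(2018, 12, 10))
```

Plotting both series on a shared time axis and marking holidays and staff changes on it is one way the kind of pattern described in this case study could be made visible.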

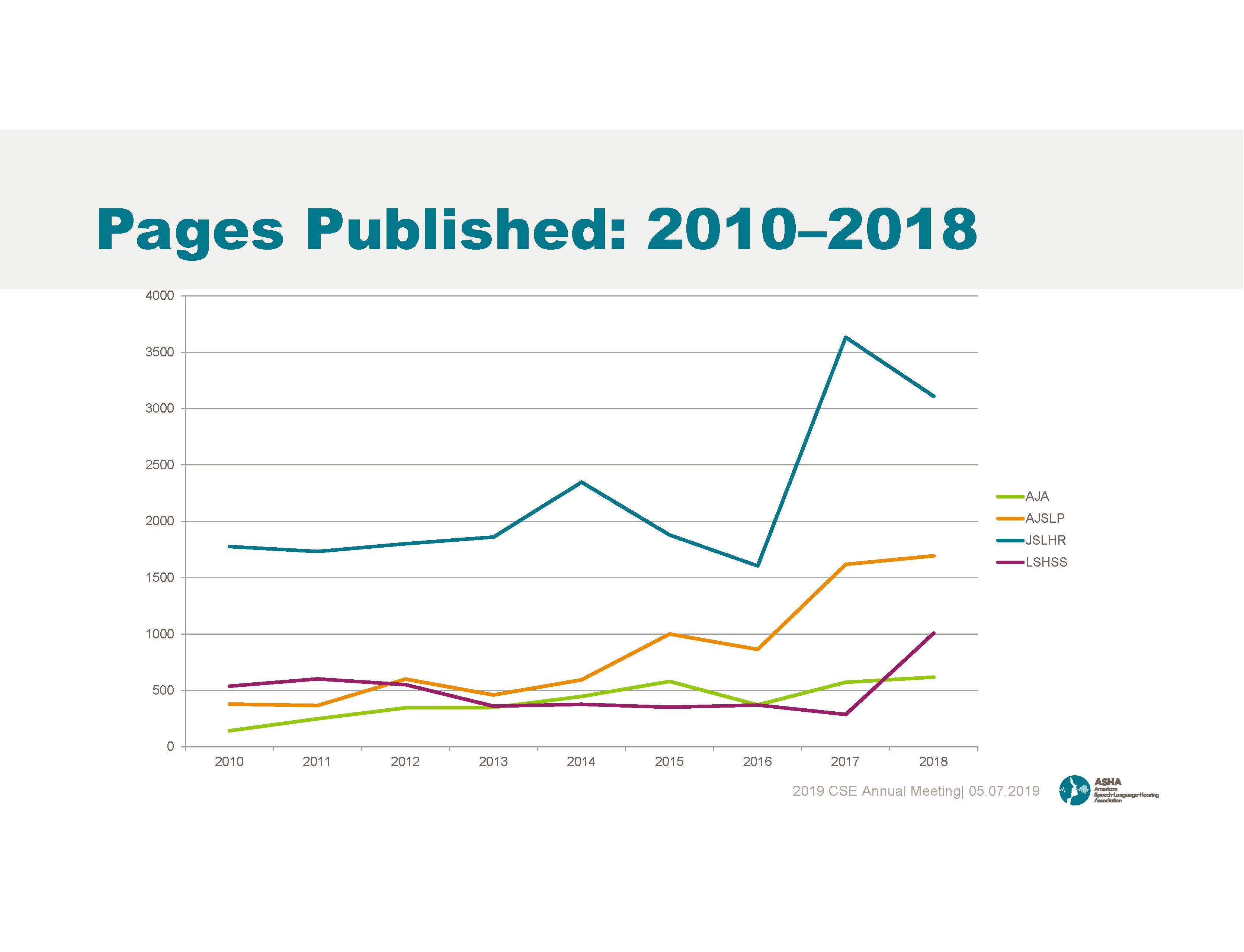

Next up was Victoria Koulakjian from the American Speech-Language-Hearing Association, who also used production data to examine workflow issues. In this case, an examination of days from acceptance to publication, task duration reports and article information identified an increase in volume (Figure 2) as well as other production-related challenges, such as static resources and staff levels.

Three main challenges were identified: too much time and energy being spent on copyediting and proofreading, production stalling when articles arrived with incomplete or incorrect materials, and competing priorities taking time away from production tasks. The team tackled the first problem by revising its approach to editing: moving copyediting from freelancers to a company, reducing the number of rounds of review and query by sending author proofs at a later editing stage, and having production editors focus on project management rather than on content. To reduce production backlogs caused by missing materials, they gave authors clearer requirements for necessary elements such as disclosures, copyright forms, and figure quality; trained staff to better identify problems; and began holding articles back from production until all required materials were in hand. These interventions were successful but created a new problem: times from acceptance to publication began to rise because articles missing items were being held. To address the final issue, competing priorities, the team focused on holding regular status meetings to identify and predict delays through review of workload reports and open, honest discussions of challenges.

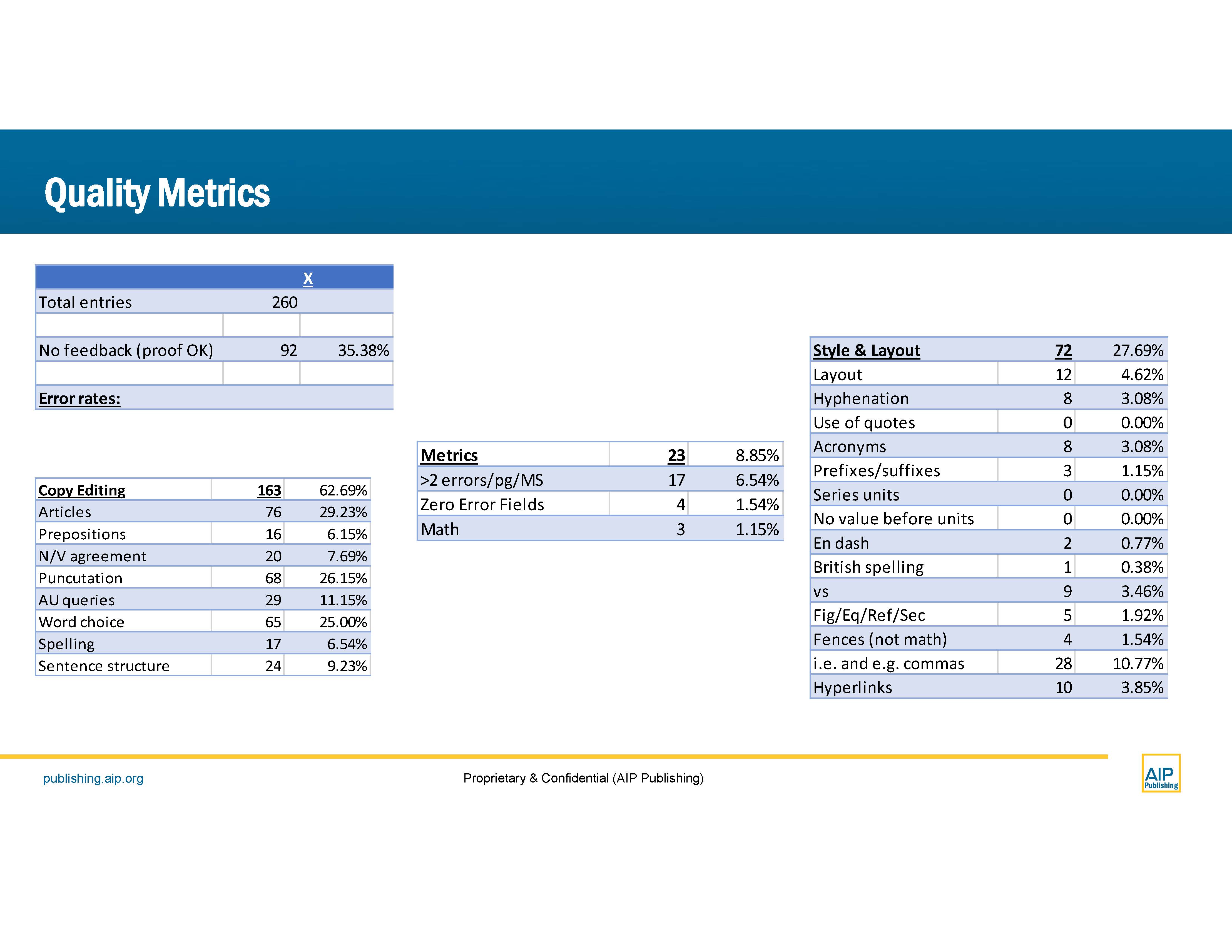

The last speaker was Theresa Fucito of AIP Publishing, who described an unexplained daily production backlog that, according to the usual metrics, should not have existed. She conducted an end-to-end review of the entire in-house process to establish what tasks were being done, why they were being done, how they could be done differently, and whether they required a human to do them. Staff were observed and asked why a task was done a certain way. The team then assessed whether each task sat at the ideal place in the process and whether the best approach was being used. A number of “quick wins” were identified, such as eliminating redundancies (e.g., copyright checks already completed earlier) and automating processes where possible (e.g., renumbering of references). They implemented a workflow change that focused on a “touch-it-once” approach and, interestingly, asked staff to work on their production tasks first each day and address emails at the end of the day. This resulted in a time savings of 50 minutes per day per person. When it appeared that the copyediting and composition vendor was introducing errors, her team created a “quality metric” to assess and quantify those errors, which were then summarized in a report to the vendor to identify areas that needed improvement (Figure 3). The combined changes to their process resulted in a 16% improvement in author proof turnaround times.
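The report does not say how the “quality metric” was defined, so the following is only a hypothetical sketch of one way vendor-introduced errors could be quantified and summarized. The error categories, the sample log, and the errors-per-article measure are assumptions, not details from the session.

```python
from collections import Counter

# Hypothetical error log: one (article_id, category) entry per
# vendor-introduced error found during in-house checks.
error_log = [
    ("A1", "reference"),
    ("A1", "typo"),
    ("A2", "reference"),
    ("A3", "figure"),
    ("A3", "reference"),
]
articles_checked = 4  # assumed count; includes articles with no errors


def summarize(log, n_articles):
    """Overall error rate plus a per-category tally, most frequent first."""
    by_category = Counter(category for _, category in log)
    return {
        "errors_per_article": len(log) / n_articles,
        "by_category": by_category.most_common(),
    }


summary = summarize(error_log, articles_checked)
```

A summary of this shape, produced at regular intervals, would let a vendor see both the overall rate and which error types dominate.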

Although some of the problems identified by speakers in this session were still in the process of being solved, production metrics clearly played a critical role in identifying the major contributors to problems. Metrics then assisted with pinpointing the causative issues and informing the most effective courses of action to correct the problems.

References and Links

- Friedman M. Using production metrics to track journals’ workflow. Sci Ed. 2018;41:63–64.